Statistics 4.2 is a software program for analyzing and interpreting data. It is widely used in business, science, and the social sciences to support decisions based on data. Like any software program, however, it can be complex and requires a certain level of expertise to use effectively.

In this article, we answer some common questions that users have about Statistics 4.2. Whether you are a beginner or an experienced user, these answers will help you navigate the program and make the most of its features. We cover data input, data manipulation, statistical tests, and data visualization.

One of the first questions users often have is how to input their data into the program. Statistics 4.2 offers multiple ways to do this, including manual entry, importing data from external sources, and linking to databases. We will explain the pros and cons of each method, as well as provide step-by-step instructions on how to perform each one.
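As a rough illustration of these routes, the Python sketch below parses a small CSV export (an external source), appends a manually entered record, and loads everything into an in-memory SQLite database before querying it. It uses only Python's standard library as a stand-in, since Statistics 4.2's own import commands are not shown here; the column names, values, and function names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export standing in for an external data source.
CSV_TEXT = """id,group,score
1,A,12.5
2,B,9.0
3,A,11.0
"""

def load_csv(text):
    """Parse CSV text into a list of dicts with typed fields."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"id": int(row["id"]), "group": row["group"], "score": float(row["score"])}
        for row in reader
    ]

def group_means_via_sqlite(rows):
    """Load rows into an in-memory SQLite table and return the mean score per group."""
    conn = sqlite3.connect(":memory:")
    # "grp" is used as the column name because GROUP is an SQL keyword.
    conn.execute("CREATE TABLE scores (id INTEGER PRIMARY KEY, grp TEXT, score REAL)")
    conn.executemany(
        "INSERT INTO scores VALUES (?, ?, ?)",
        [(r["id"], r["group"], r["score"]) for r in rows],
    )
    result = conn.execute(
        "SELECT grp, AVG(score) FROM scores GROUP BY grp ORDER BY grp"
    ).fetchall()
    conn.close()
    return result

manual_rows = [{"id": 4, "group": "B", "score": 10.0}]   # manual entry
rows = load_csv(CSV_TEXT) + manual_rows                   # imported + manual records
print(group_means_via_sqlite(rows))  # [('A', 11.75), ('B', 9.5)]
```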

Overview of Statistics 4.2

Statistics 4.2 goes beyond introductory material to explore advanced statistical methods and techniques. This course builds on the foundational knowledge gained in previous statistics courses and takes a more comprehensive approach to data analysis and interpretation.

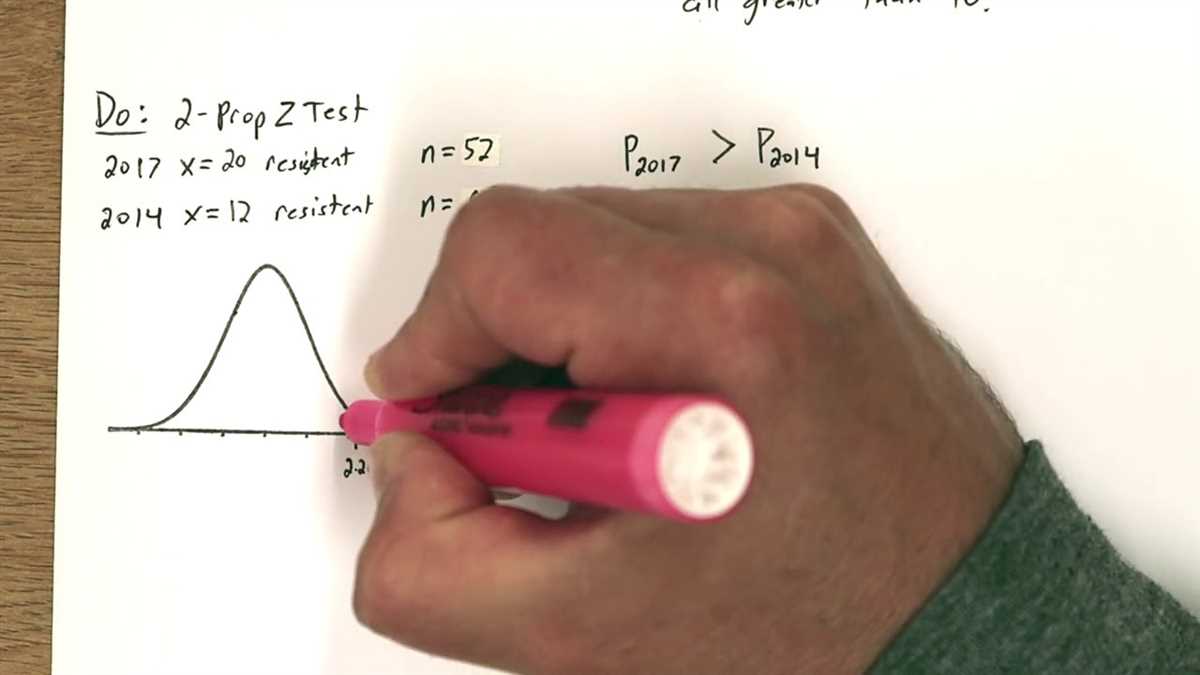

One of the key topics covered in this course is hypothesis testing. We learn how to formulate null and alternative hypotheses, select an appropriate test statistic, and interpret the results. Additionally, we explore various types of hypothesis tests, including z-tests, t-tests, chi-square tests, and analysis of variance (ANOVA).
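To make the hypothesis-testing steps concrete, here is a minimal one-sample z-test in Python. It assumes the population standard deviation is known, which is what distinguishes a z-test from a t-test; with an estimated standard deviation you would use the t distribution with n - 1 degrees of freedom instead (for example via `scipy.stats.ttest_1samp`). The data, the null value of 50, and the function name are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean

def one_sample_z_test(sample, mu0, sigma):
    """Two-sided z-test of H0: mu == mu0, assuming the population SD sigma is known."""
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / sqrt(n))   # test statistic
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))   # probability in both tails
    return z, p_value

# Hypothetical sample of 9 measurements, known sigma = 3, H0: mu = 50.
sample = [52, 55, 51, 53, 54, 50, 56, 52, 54]
z, p = one_sample_z_test(sample, mu0=50, sigma=3)
alpha = 0.05
print(f"z = {z:.2f}, p = {p:.4f}, reject H0 at alpha = {alpha}: {p < alpha}")
# z = 3.00, p = 0.0027, reject H0 at alpha = 0.05: True
```

Here the sample mean of 53 lies three standard errors above 50, so the null hypothesis is rejected at the 5% level.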

Another important topic in Statistics 4.2 is regression analysis. We examine linear regression models and learn how to estimate and interpret regression coefficients, assess model fit, and make predictions from the regression equation. We also explore multiple regression, which models the relationship between several predictor variables and a single outcome variable.
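The estimation and interpretation steps for a simple (one-predictor) linear regression can be sketched with the ordinary least-squares formulas: the slope is b1 = Sxy / Sxx and the intercept is b0 = ȳ - b1·x̄. The Python below is an illustrative stand-in for whatever regression procedure Statistics 4.2 provides; the function name and the study-hours data are made up.

```python
from statistics import mean

def fit_simple_regression(x, y):
    """Ordinary least squares fit of y = b0 + b1 * x. Returns (b0, b1, r_squared)."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx                     # slope: change in y per one-unit change in x
    b0 = y_bar - b1 * x_bar            # intercept: predicted y when x = 0
    ss_res = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot    # share of the variance in y explained by x
    return b0, b1, r_squared

# Hypothetical data: hours studied (x) and exam score (y).
hours = [1, 2, 3, 4, 5]
score = [52, 55, 61, 64, 68]
b0, b1, r2 = fit_simple_regression(hours, score)
print(f"score = {b0:.1f} + {b1:.1f} * hours, R^2 = {r2:.3f}")  # score = 47.7 + 4.1 * hours, R^2 = 0.989
print(f"predicted score for 6 hours: {b0 + b1 * 6:.1f}")        # predicted score for 6 hours: 72.3
```

A slope of 4.1 means each extra hour is associated with about 4.1 more points in this toy data. Multiple regression generalizes the same least-squares idea to several predictors, usually solved with matrix methods.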

In addition to hypothesis testing and regression analysis, this course covers other advanced statistical techniques such as categorical data analysis, time series analysis, and nonparametric statistics. We explore methods for analyzing categorical variables, detecting patterns and trends in time series data, and conducting statistical tests that do not assume a specific form (such as a normal distribution) for the underlying population.
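As one example of the nonparametric idea, a permutation test compares two groups without assuming normality: under the null hypothesis the group labels are interchangeable, so shuffling them shows how large a difference arises by chance alone. The Python sketch below estimates a two-sided p-value for a difference in means by random shuffling; the function name, seed, resample count, and data are illustrative assumptions.

```python
import random

def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means; returns an estimated p-value.

    Under H0 the labels are exchangeable, so we shuffle the pooled data and count
    how often a random relabelling gives a difference at least as large as observed.
    """
    rng = random.Random(seed)
    n_a = len(a)
    observed = abs(sum(a) / n_a - sum(b) / len(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)                     # relabel observations at random
        group_a, group_b = pooled[:n_a], pooled[n_a:]
        diff = abs(sum(group_a) / len(group_a) - sum(group_b) / len(group_b))
        if diff >= observed - 1e-12:            # small tolerance for floating-point ties
            extreme += 1
    # The +1 terms count the observed labelling itself and keep p above zero.
    return (extreme + 1) / (n_resamples + 1)

treatment = [12, 14, 15, 16, 18]
control = [8, 9, 10, 11, 13]
p = permutation_test(treatment, control)
print(f"estimated p = {p:.3f}")
```

With 10 observations there are 252 ways to split them into two groups of five, and enumerating all of them gives an exact p-value of 4/252 ≈ 0.016 for this data; the random-shuffle estimate should land close to that.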

Throughout the course, we use statistical software to analyze real-world datasets and gain hands-on experience in data analysis. This allows us to apply the concepts and techniques learned in a practical setting, further enhancing our understanding of statistical methods and their applications.

Key Topics Covered in Statistics 4.2:

- Hypothesis testing

- Regression analysis

- Categorical data analysis

- Time series analysis

- Nonparametric statistics

What is Statistics 4.2?

Statistics 4.2 is a statistical software program that is widely used for data analysis and visualization. It provides a range of tools and techniques for working with numerical and categorical data, making it an essential tool for researchers, analysts, and data scientists.

One of the key features of Statistics 4.2 is its ability to perform various statistical analyses, such as descriptive statistics, hypothesis testing, regression analysis, and ANOVA. These analyses allow users to uncover patterns and relationships in data, make informed decisions, and draw meaningful conclusions.
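ANOVA, one of the analyses listed above, compares several group means by splitting the total variability into between-group and within-group parts. The Python sketch below computes the one-way ANOVA F statistic from those two sums of squares; it is a generic illustration rather than Statistics 4.2's implementation, and the function name and three groups are hypothetical. Converting F to a p-value requires the F distribution, which `scipy.stats.f_oneway` handles if SciPy is available.

```python
from statistics import mean

def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on two or more groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

f, df1, df2 = one_way_anova_f([4, 5, 6], [6, 7, 8], [9, 10, 11])
print(f"F({df1}, {df2}) = {f:.2f}")  # F(2, 6) = 19.00
```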

The software also offers a user-friendly interface that makes it easy to import and manipulate data. Users can import data from various sources, such as spreadsheets and databases, and perform data cleaning and preparation tasks. Additionally, Statistics 4.2 provides a wide range of data visualization options, including charts, graphs, and tables, to help users present their findings in a clear and concise manner.

Furthermore, Statistics 4.2 supports programming and automation through its built-in programming language, which lets users automate repetitive tasks, create custom analyses, and extend the software's functionality. This is especially useful when the same steps must be applied to large or complex datasets.

In summary, Statistics 4.2 is a versatile software program that provides a comprehensive set of tools for statistical analysis, data visualization, and data management. Its ease of use, advanced features, and robust capabilities make it a valuable tool for many people who work with data.

Features of Statistics 4.2

Statistics 4.2 is a comprehensive statistical software package that offers a wide range of features and tools for data analysis. With its intuitive interface and powerful capabilities, it is an invaluable tool for researchers, statisticians, and data scientists.

Data Visualization: One of the key features of Statistics 4.2 is its ability to create visually appealing and interactive charts, graphs, and plots. Whether you need to present your findings in a professional presentation or explore patterns in your data, Statistics 4.2 provides a variety of options for data visualization.

Data Analysis: Statistics 4.2 offers a wide range of statistical analysis techniques, from basic descriptive statistics to advanced modeling and forecasting. You can perform regression analysis, t-tests, ANOVA, factor analysis, and more, all with just a few clicks.

Automation and Reproducibility: With Statistics 4.2, you can automate your data analysis tasks and create reproducible workflows. The software provides a scripting language for writing custom scripts and automating repetitive tasks. This saves time and makes it easier for you and others to reproduce your analysis.
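Statistics 4.2's scripting language is not shown in this article, so here is a Python stand-in for the reproducibility point: a percentile bootstrap confidence interval for a mean, where fixing the random seed makes every rerun produce the same interval. The function name, seed, resample count, and data are illustrative choices.

```python
import random
from statistics import mean

def bootstrap_mean_ci(data, n_resamples=2000, level=0.95, seed=42):
    """Percentile bootstrap confidence interval for the mean.

    A dedicated random.Random(seed) generator makes the resamples, and so the
    interval, identical on every run with the same inputs.
    """
    rng = random.Random(seed)
    n = len(data)
    # Resample with replacement and record each resample's mean, sorted.
    boot_means = sorted(sum(rng.choices(data, k=n)) / n for _ in range(n_resamples))
    tail = (1 - level) / 2
    lo = boot_means[round(tail * n_resamples)]
    hi = boot_means[round((1 - tail) * n_resamples) - 1]
    return lo, hi

data = [2.1, 2.5, 2.8, 3.0, 3.3, 3.9, 4.2]
print(f"mean = {mean(data):.2f}, 95% CI = {bootstrap_mean_ci(data)}")
```

Keeping the seed in the script alongside the analysis is what lets a colleague rerun it and get the same numbers.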

Data Management: Statistics 4.2 allows you to efficiently manage your data by providing features for importing, cleaning, and organizing your datasets. You can easily merge datasets, handle missing values, and create new variables based on your data. The software also supports a wide range of file formats, making it easy to work with data from different sources.
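The three data-management tasks mentioned here (merging datasets, handling missing values, and creating new variables) can be sketched in plain Python. The record layouts, field names, and function names are hypothetical, and dropping incomplete rows (listwise deletion) is just one choice; imputation is a common alternative when dropping rows would discard too much data.

```python
# Hypothetical records from two sources, keyed by participant id.
demographics = [
    {"id": 1, "height_m": 1.70},
    {"id": 2, "height_m": 1.82},
    {"id": 3, "height_m": None},    # missing value
]
measurements = [
    {"id": 1, "weight_kg": 68.0},
    {"id": 2, "weight_kg": 90.0},
    {"id": 3, "weight_kg": 75.0},
    {"id": 4, "weight_kg": 80.0},   # no matching demographics row
]

def inner_merge(left, right, key):
    """Join two lists of dicts on `key`, keeping only keys present in both."""
    right_by_key = {row[key]: row for row in right}
    return [{**row, **right_by_key[row[key]]} for row in left if row[key] in right_by_key]

def add_bmi(rows):
    """Drop rows with a missing height or weight, then derive BMI = kg / m^2."""
    complete = [
        r for r in rows
        if r.get("height_m") is not None and r.get("weight_kg") is not None
    ]
    return [{**r, "bmi": round(r["weight_kg"] / r["height_m"] ** 2, 1)} for r in complete]

merged = inner_merge(demographics, measurements, key="id")
clean = add_bmi(merged)
for row in clean:
    print(row)
```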

Collaboration and Sharing: Statistics 4.2 supports collaboration and sharing of analysis results. You can easily export your graphs and tables to various file formats such as PDF, Excel, and Word. Additionally, the software allows you to generate dynamic reports that can be shared with others, making it easier to communicate your findings with colleagues or clients.

Data Mining and Machine Learning: Another notable feature of Statistics 4.2 is its support for data mining and machine learning. The software provides algorithms for clustering, classification, and prediction, allowing you to uncover patterns and insights in your data. Whether you’re analyzing customer behavior or predicting sales, Statistics 4.2 has the tools you need.
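The article does not say which clustering algorithms Statistics 4.2 provides. K-means is one widely used method, and the Python sketch below shows its two alternating steps: assign each point to the nearest centroid, then move each centroid to the mean of its points. Starting from the first k points keeps the example deterministic but is a simplification; practical implementations usually use better seeding such as k-means++. The function name and points are hypothetical.

```python
from math import dist

def k_means(points, k, n_iter=100):
    """Cluster 2-D points into k groups with Lloyd's k-means algorithm."""
    centroids = [tuple(p) for p in points[:k]]    # simple deterministic start
    labels = []
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])   # keep an empty cluster where it was
        if new_centroids == centroids:               # converged: no centroid moved
            break
        centroids = new_centroids
    return labels, centroids

points = [(1, 1), (8, 8), (1.5, 2), (8, 9), (0.5, 1.5), (9, 8)]
labels, centroids = k_means(points, k=2)
print(labels)     # [0, 1, 0, 1, 0, 1]
print(centroids)
```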

Overall, Statistics 4.2 is a powerful and versatile statistical software package that offers a wide range of features for data analysis, visualization, and modeling. Whether you are a beginner or an experienced statistician, Statistics 4.2 can help you make sense of your data and uncover valuable insights.

Data analysis

Data analysis is the process of inspecting, cleaning, transforming, and modeling data in order to discover useful information, draw conclusions, and support decision-making. It involves various techniques, tools, and methodologies that aim to extract relevant insights from raw data. In today’s data-driven world, data analysis has become an essential skill for businesses and organizations across all industries.

Data analysis can be performed using different statistical methods and approaches. Descriptive statistics are often the first step: the data are summarized with measures such as the mean, median, mode, and standard deviation, and are often plotted as well. This helps in understanding the basic characteristics of the data and identifying any patterns or trends.
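Python's standard `statistics` module computes each of these measures directly, which makes a convenient illustration; the `describe` helper name and the data are made up. Note that `stdev` is the sample standard deviation (dividing by n - 1), while `pstdev` gives the population version.

```python
from statistics import mean, median, mode, stdev

def describe(values):
    """Return basic descriptive statistics for a list of numbers."""
    return {
        "mean": mean(values),
        "median": median(values),
        "mode": mode(values),     # most frequent value
        "sd": stdev(values),      # sample standard deviation (n - 1 denominator)
    }

data = [4, 8, 6, 5, 3, 8, 9, 5, 8]
for name, value in describe(data).items():
    print(f"{name:>6}: {value:.3f}")
# mean 6.222, median 6.000, mode 8.000, sd 2.108
```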

Hypothesis testing is another important aspect of data analysis. It involves formulating null and alternative hypotheses, collecting relevant data, and using a statistical test to decide whether the data provide enough evidence to reject the null hypothesis. Failing to reject the null hypothesis does not prove it true; it means the evidence was not strong enough. Used this way, hypothesis tests support informed decisions and conclusions grounded in empirical evidence.
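For categorical data, a common test is Pearson's chi-square test of independence. The Python sketch below handles the 2x2 case, where the statistic has 1 degree of freedom; because the chi-square distribution with 1 degree of freedom is the distribution of a squared standard normal variable, the p-value can be computed with `statistics.NormalDist`. The function name and survey counts are hypothetical, and the sketch omits the Yates continuity correction that `scipy.stats.chi2_contingency` applies to 2x2 tables by default.

```python
from math import sqrt
from statistics import NormalDist

def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (no continuity correction).

    Returns (chi2, p_value). With 1 degree of freedom,
    P(X >= chi2) = 2 * (1 - Phi(sqrt(chi2))).
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n   # count expected under independence
            chi2 += (observed - expected) ** 2 / expected
    p_value = 2 * (1 - NormalDist().cdf(sqrt(chi2)))
    return chi2, p_value

# Hypothetical survey: rows = group A / group B, columns = answered yes / no.
table = [[30, 20], [15, 35]]
chi2, p = chi_square_2x2(table)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

Because p is well below 0.05, this toy data would lead you to reject the null hypothesis that group and response are independent.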

Data analysis also involves data visualization techniques, which help in representing data in a visual form such as charts, graphs, and maps. Visualizations enhance the understanding of complex data sets and facilitate the communication of findings to stakeholders. Tools like Microsoft Excel, Tableau, and Python libraries like Matplotlib and Seaborn are commonly used for data visualization.

In addition to these techniques, regression analysis is often used to quantify the relationship between variables and make predictions. Its coefficients describe the direction and size of the relationship between a dependent variable and one or more independent variables, while fit measures such as R² indicate how much of the variation in the dependent variable the model explains.
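A closely related quantity is Pearson's correlation coefficient r: its sign gives the direction of a linear relationship and its magnitude (from 0 to 1) gives the strength, and in simple linear regression R² equals r². The Python sketch below computes r from its definition; the function name and the advertising and sales figures are hypothetical.

```python
from math import sqrt
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    syy = sum((b - y_bar) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical monthly advertising spend and sales.
ad_spend = [10, 20, 30, 40, 50]
sales = [25, 41, 58, 70, 93]
r = pearson_r(ad_spend, sales)
print(f"r = {r:.3f}, r^2 = {r ** 2:.3f}")  # r = 0.996, r^2 = 0.992
```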

Overall, data analysis plays a crucial role in driving informed decision-making and solving complex problems. It allows businesses and organizations to gain insights from data, identify opportunities for improvement, and make data-driven decisions that can lead to improved performance and success.

Data Visualization

Data visualization is a powerful tool in statistics that allows us to represent data in a visual format. It helps us to understand complex data sets and identify patterns, trends, and relationships between variables. Effective data visualization can simplify complex information and make it easier to interpret and communicate to others.

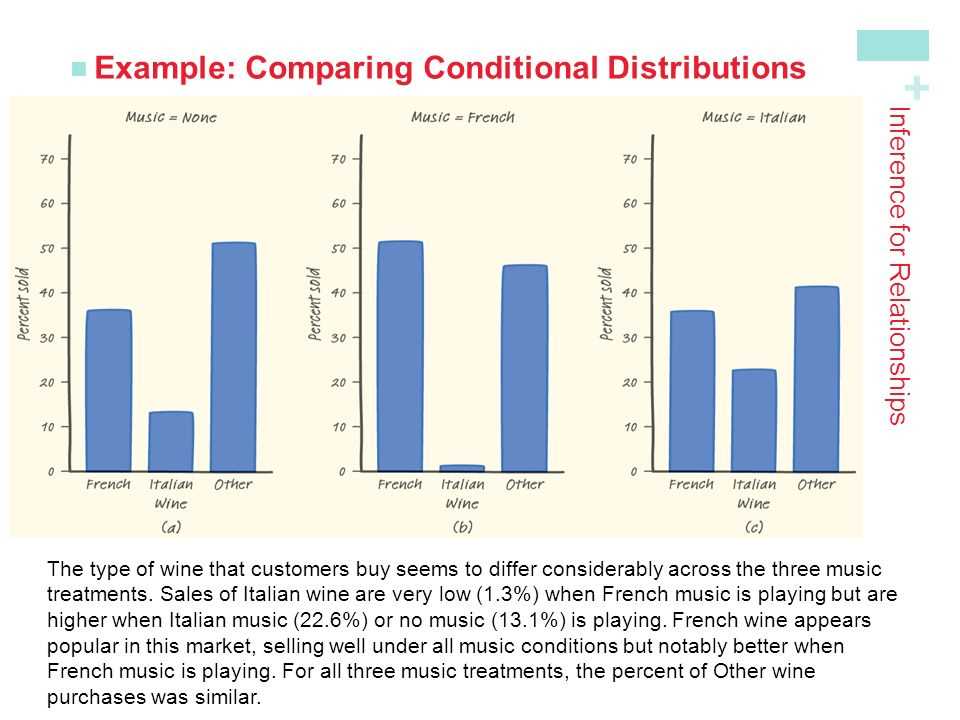

There are various types of data visualizations that can be used depending on the nature of the data and the insights we want to gain. Some common types include charts, graphs, maps, and infographics. Each type has its own strengths and can be more suitable for displaying certain types of data. For example, line graphs are often used to show trends over time, while bar charts are useful for comparing values between different categories.
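As a minimal, dependency-free illustration of the bar-chart case (comparing values across categories), the Python sketch below draws a horizontal bar chart as text. In practice you would more likely use a plotting library, for example Matplotlib's `pyplot.barh`; the function name and sales figures here are hypothetical.

```python
def text_bar_chart(counts, width=30, symbol="#"):
    """Render a dict of category -> value as a horizontal text bar chart.

    The largest value gets a bar `width` characters long; others are scaled to it.
    """
    largest = max(counts.values())
    label_width = max(len(label) for label in counts)
    lines = []
    for label, value in counts.items():
        bar = symbol * round(value / largest * width)
        lines.append(f"{label:<{label_width}} | {bar} {value}")
    return "\n".join(lines)

# Hypothetical sales by region.
sales_by_region = {"North": 120, "South": 90, "East": 150, "West": 60}
print(text_bar_chart(sales_by_region))
```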

When creating data visualizations, it’s important to consider the audience and the purpose of the visualization. The visual design should be clear, concise, and visually appealing, with appropriate labeling and color choices. It’s also important to choose the right type of visualization to effectively communicate the main message and insights of the data.

Data visualization plays a crucial role in exploratory data analysis, as it helps to uncover hidden patterns and correlations in the data. It can also be used in presentations and reports to effectively communicate findings and insights. Overall, data visualization is an essential tool for anyone working with data, as it allows us to see and understand patterns and trends that may not be immediately obvious in raw data.

Statistical Modeling

Statistical modeling is the process of creating mathematical models that represent relationships between variables in a dataset. These models are used to make predictions, analyze data, and understand underlying patterns and relationships. Statistical modeling plays a crucial role in various fields, including economics, finance, biology, social sciences, and engineering.

In statistical modeling, data is carefully collected and analyzed to identify the variables of interest and their relationships. The process involves selecting an appropriate model, estimating its parameters, and testing its validity and accuracy. The models can be simple or complex, depending on the complexity of the dataset and the nature of the relationships being studied.

Types of statistical models include linear regression, logistic regression, time series analysis, survival analysis, and hierarchical models. These models use mathematical equations and statistical techniques to estimate and interpret the relationships between variables. They can be used to predict future outcomes, identify risk factors, evaluate interventions, and understand the impact of different variables on a target variable.
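Logistic regression, one of the model types listed above, models the probability of a binary outcome as p = 1 / (1 + e^-(b0 + b1·x)). The Python sketch below estimates b0 and b1 by plain gradient descent on the log-loss, chosen because it is short and transparent; statistical packages typically use faster Newton-type optimizers. The learning rate, iteration count, function names, and pass/fail data are illustrative assumptions.

```python
from math import exp

def sigmoid(z):
    """Logistic function mapping a real number to a probability in (0, 1)."""
    return 1 / (1 + exp(-z))

def fit_logistic(x, y, lr=0.1, n_iter=5000):
    """Fit P(y = 1 | x) = sigmoid(b0 + b1 * x) by batch gradient descent on the mean log-loss."""
    b0 = b1 = 0.0
    n = len(x)
    for _ in range(n_iter):
        # Prediction errors; the log-loss gradient is their mean (weighted by x for b1).
        errors = [sigmoid(b0 + b1 * xi) - yi for xi, yi in zip(x, y)]
        b0 -= lr * sum(errors) / n
        b1 -= lr * sum(e * xi for e, xi in zip(errors, x)) / n
    return b0, b1

# Hypothetical data: hours studied and whether the student passed (1) or not (0).
hours = [0.5, 1, 1.5, 2, 2.5, 3, 3.5, 4, 4.5, 5]
passed = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic(hours, passed)
for h in (1, 4.5):
    print(f"P(pass | {h} h) = {sigmoid(b0 + b1 * h):.2f}")
```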

In addition to traditional statistical modeling techniques, advanced methods such as machine learning algorithms and Bayesian modeling are gaining popularity. These techniques can handle high-dimensional data and non-linear relationships. They allow more flexible modeling and, with enough data, can produce more accurate predictions, which is why they are widely used in data science and artificial intelligence.
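Bayesian modeling combines a prior distribution with observed data to produce a posterior distribution. The simplest worked case is the beta-binomial model, where a Beta(α, β) prior on a success rate plus binomial data gives a Beta(α + successes, β + failures) posterior. The Python sketch below applies that conjugate update; the function names, the uniform prior, and the conversion counts are hypothetical.

```python
def beta_binomial_update(alpha, beta, successes, failures):
    """Conjugate update: Beta(alpha, beta) prior + binomial data -> Beta posterior."""
    return alpha + successes, beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

# Uniform Beta(1, 1) prior on a conversion rate; hypothetical data: 18 of 60 converted.
post_a, post_b = beta_binomial_update(1, 1, successes=18, failures=42)
print(f"posterior Beta({post_a}, {post_b}), mean = {beta_mean(post_a, post_b):.3f}")
# posterior Beta(19, 43), mean = 0.306
```

The posterior mean of about 0.306 sits slightly above the raw rate 18/60 = 0.300 because the uniform prior pulls the estimate toward 0.5.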

Overall, statistical modeling is a powerful tool for analyzing and interpreting data. It provides a systematic approach to understanding relationships and making predictions based on data. It enables researchers and analysts to gain insights, make informed decisions, and solve complex problems in a wide range of disciplines.

Benefits of using Statistics 4.2

Statistics 4.2 is a powerful statistical software that offers several benefits for data analysis and decision-making. Its comprehensive set of features and intuitive interface make it an ideal tool for professionals, researchers, and students.

1. Data visualization: Statistics 4.2 provides various visualization tools to represent data in a more understandable format. With interactive charts, graphs, and plots, users can easily identify patterns, trends, and outliers in their datasets. This visual representation aids in better understanding the data and making informed decisions based on the analysis.

2. Statistical analysis: The software offers a wide range of statistical techniques and tests to analyze data. These include hypothesis testing, regression analysis, analysis of variance (ANOVA), and many more. Users can perform advanced statistical modeling and explore relationships between variables, helping them gain insights and make evidence-based decisions.

3. Data management: Statistics 4.2 provides powerful data management capabilities. Users can import, clean, and transform data from various sources, ensuring data integrity and consistency. The software also supports data merging, subsetting, and variable recoding, allowing users to manipulate and prepare their data for analysis efficiently.

4. Reproducibility: Statistics 4.2 enables users to create reproducible analyses. The software allows users to document their analysis steps, including data preprocessing, statistical methods used, and results obtained. This documentation ensures transparency and facilitates collaboration, as others can easily replicate and verify the analysis.

5. Performance and scalability: Statistics 4.2 is designed to handle large datasets and complex analyses efficiently. It can process and analyze massive amounts of data within a reasonable time frame, providing faster results for time-sensitive projects. The software also supports parallel computing, utilizing the computational power of multicore processors for quicker analysis.
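Item 1 of the list above mentions spotting outliers. One common numeric rule, the one behind box-plot whiskers (Tukey's fences), flags values more than 1.5 interquartile ranges below the first quartile or above the third. The Python sketch below applies it with `statistics.quantiles`; the function name and response-time data are hypothetical, and because software packages compute quartiles in slightly different ways, borderline cases can differ between tools.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)   # default 'exclusive' quartile method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical response times in milliseconds.
response_times = [12, 14, 13, 15, 14, 13, 16, 12, 15, 48]
print(iqr_outliers(response_times))   # [48]
```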

Overall, Statistics 4.2 offers a wide range of benefits for those involved in data analysis and decision-making. Its data visualization, statistical analysis, data management, reproducibility features, and performance capabilities make it a valuable tool for various industries and academic disciplines.

Improved data accuracy

Data accuracy is crucial in statistical analysis because it directly affects the reliability and validity of the findings. Inaccurate data can lead to flawed results and misinterpretations. Fortunately, advances in technology and data collection methods offer several ways to improve data accuracy.

Data validation: Implementing data validation techniques can help identify and prevent errors during data entry. This can include range checks (for example, rejecting an age of 250), type and format checks, and consistency rules that ensure all entered data fall within the expected parameters.
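A minimal sketch of such validation rules for a single record, written in Python, with a type check, a range check, an allowed-values check, and two consistency checks. The field names (`age`, `smoker`, `years_smoking`), the limits, and the function name are hypothetical; a real project would take them from its own data dictionary.

```python
def validate_record(record):
    """Return a list of problems found in one record; an empty list means it passed."""
    errors = []
    age = record.get("age")
    if isinstance(age, bool) or not isinstance(age, int):   # type check
        errors.append("age must be a whole number")
        age = None
    elif not 0 <= age <= 120:                                # range check
        errors.append(f"age {age} is outside the range 0-120")
    smoker = record.get("smoker")
    if smoker not in {"yes", "no"}:                          # allowed-values check
        errors.append("smoker must be 'yes' or 'no'")
    years = record.get("years_smoking", 0)
    if not isinstance(years, (int, float)) or years < 0:
        errors.append("years_smoking must be a non-negative number")
    elif smoker == "no" and years > 0:                       # consistency checks
        errors.append("a non-smoker cannot report years_smoking > 0")
    elif age is not None and years > age:
        errors.append("years_smoking cannot exceed age")
    return errors

print(validate_record({"age": 34, "smoker": "yes", "years_smoking": 10}))  # []
print(validate_record({"age": 250, "smoker": "no"}))  # ['age 250 is outside the range 0-120']
```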

Data cleaning: Data cleaning involves reviewing and correcting errors, inconsistencies, and inaccuracies in the dataset. This process can include removing duplicate entries, correcting formatting errors, and resolving discrepancies.
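The Python sketch below shows two of these cleaning steps: correcting inconsistent formatting (extra spaces, mixed case) and then removing entries that become duplicates once normalized. The function name, field names, and records are hypothetical.

```python
def clean_records(records):
    """Normalize text fields, then drop records that are duplicates after normalization."""
    seen = set()
    cleaned = []
    for rec in records:
        name = " ".join(rec["name"].split()).title()   # collapse extra spaces, fix case
        city = rec["city"].strip().upper()
        key = (name, city)
        if key in seen:                                 # duplicate entry: skip it
            continue
        seen.add(key)
        cleaned.append({"name": name, "city": city})
    return cleaned

raw = [
    {"name": "  jane   doe", "city": "boston "},
    {"name": "Jane Doe", "city": "BOSTON"},
    {"name": "john smith", "city": "Denver"},
]
print(clean_records(raw))
# [{'name': 'Jane Doe', 'city': 'BOSTON'}, {'name': 'John Smith', 'city': 'DENVER'}]
```

Normalizing before deduplicating matters here: the first two raw records look different but describe the same person. Note that `str.title()` mishandles names such as "McDonald", so real pipelines often need custom rules.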

Automated data collection: Automating the data collection process can significantly reduce human error. Electronic data capture methods, such as online surveys or automated sensors, reduce the risk of manual data entry errors.

Training and education: Providing proper training to data collectors and analysts is essential to improve data accuracy. This includes educating them about the importance of accurate data, teaching proper data collection techniques, and familiarizing them with data validation and cleaning processes.

Overall, ensuring data accuracy is essential for producing reliable and valid statistical analysis. Implementing data validation, data cleaning, automated data collection methods, and providing training and education to data collectors can significantly improve data accuracy in statistical analysis.