AP Stats: Modeling the World is a comprehensive introductory statistics course designed to prepare students for the Advanced Placement (AP) exam. This course explores various statistical concepts and methodologies through real-world examples and data analysis. Understanding the answers to the questions and problems posed in this course is crucial for students to develop a solid foundation in statistics.

Throughout the course, students are exposed to a wide range of statistical models and techniques, including descriptive statistics, probability, hypothesis testing, and regression analysis. By applying these models to real-world situations, students gain a deeper understanding of how statistics can be used to make informed decisions and draw meaningful conclusions.

Ap Stats: Modeling the World provides students with the opportunity to develop critical thinking and problem-solving skills, as they are required to interpret data, analyze trends, and communicate their findings effectively. The course emphasizes the importance of statistical literacy and the ability to critically evaluate data and statistical claims.

With the complexity of statistical analysis increasing in today’s data-driven world, having a strong foundation in statistics is essential for success in various fields, including business, healthcare, social sciences, and engineering. Therefore, having access to accurate and reliable answers to the questions and problems posed in Ap Stats: Modeling the World is crucial for students to excel in their studies and future careers.

Overview of the AP Stats course and its objectives

The AP Statistics course is designed to provide students with a framework for understanding statistical concepts and methods in order to analyze data, make informed decisions, and critically evaluate claims and conclusions. The course covers a wide range of topics, including exploring data, sampling and experimentation, probability, and statistical inference.

One of the main objectives of the AP Stats course is to develop students’ ability to effectively collect, analyze, and interpret data. Students learn how to design studies and surveys, construct and interpret graphical displays of data, and calculate and interpret summary statistics. They also learn about the importance of sampling methods and the potential sources of bias in data collection.

- Exploring data: Students learn how to describe, organize, and display data using graphical and numerical techniques. They also learn how to identify patterns and relationships in data sets and how to use data to make predictions.

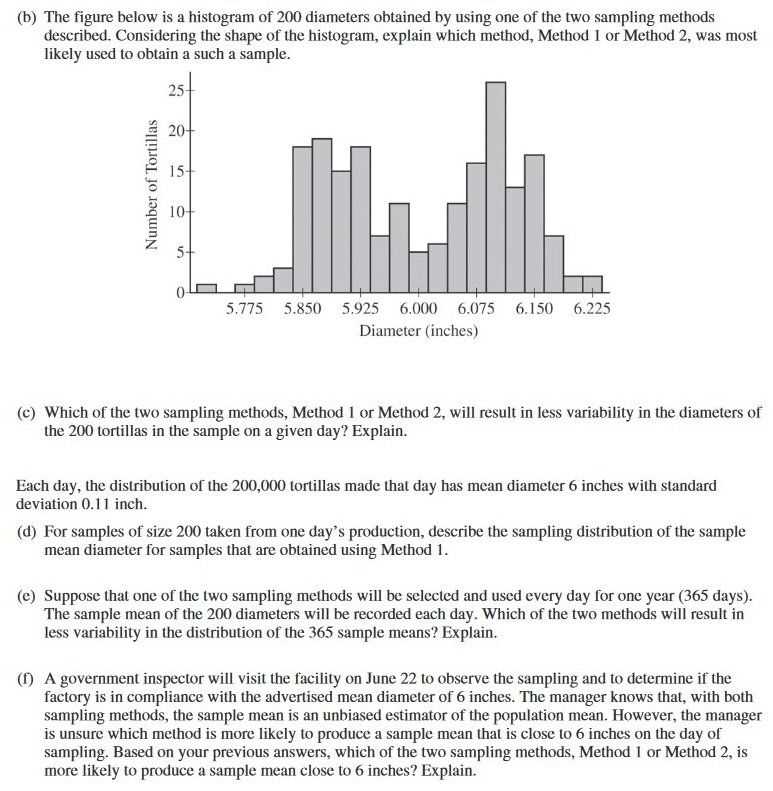

- Sampling and experimentation: Students learn the principles of sampling and experimentation, including how to design studies and surveys, select appropriate samples, and analyze and interpret the results.

- Probability: Students learn about the fundamentals of probability, including basic probability concepts, random variables, and probability distributions. They also learn how to calculate and interpret probabilities in various contexts.

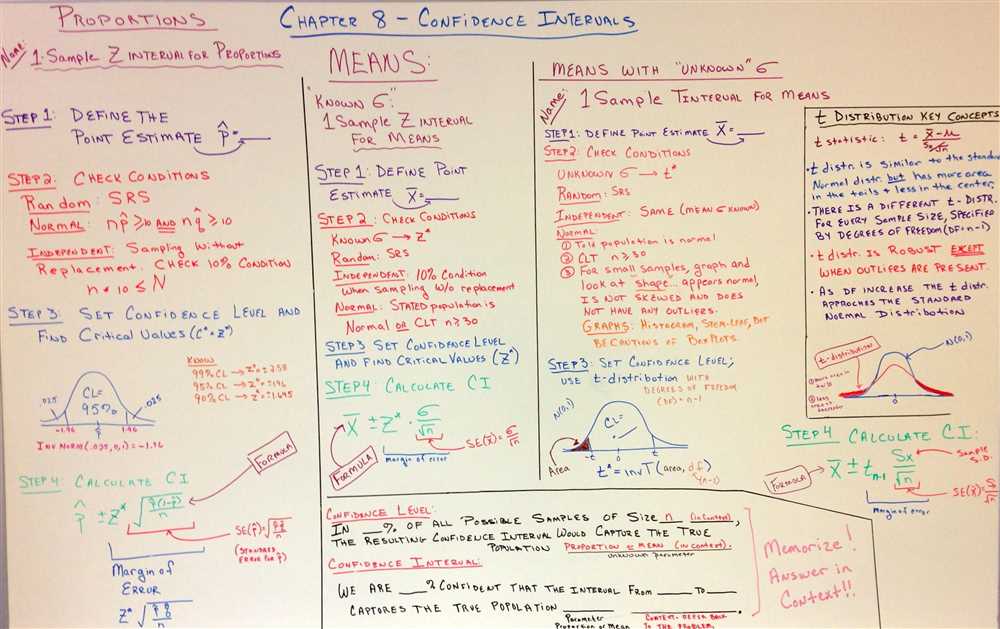

- Statistical inference: Students learn how to use sample data to make inferences about population parameters. They learn about confidence intervals, hypothesis tests, and the importance of understanding the limitations and assumptions of statistical inference.

Overall, the AP Stats course aims to equip students with the knowledge and skills necessary to analyze data, make informed decisions, and evaluate the validity of claims and conclusions. By the end of the course, students should have a solid understanding of statistical concepts and methods, as well as the ability to apply these concepts to real-world scenarios.

Understanding Statistics and Data Analysis

In today’s world, where information is abundant and readily available, the ability to understand and analyze data is becoming increasingly important. Statistics and data analysis provide us with the tools and techniques to make sense of complex data sets and draw meaningful conclusions.

Statistics is the science of collecting, organizing, analyzing, and interpreting data. It involves the use of mathematical methods to summarize and describe data, as well as to make inferences and draw conclusions from the data. Data analysis, on the other hand, is the process of inspecting, cleaning, transforming, and modeling data to uncover useful information, draw conclusions, and support decision-making.

When we analyze data, we are essentially looking for patterns, trends, and relationships within the data. This can involve calculating and interpreting measures of central tendency and variability, conducting hypothesis tests to determine the significance of results, and fitting models to the data to make predictions or draw inferences.

Statistics and data analysis are used in a wide range of fields, including business, finance, healthcare, social sciences, and engineering. They help us make informed decisions, identify outliers and anomalies, detect trends, and predict future outcomes. Whether you are a researcher, a business professional, or a student, understanding statistics and data analysis can greatly enhance your ability to make evidence-based decisions and solve complex problems.

Overall, statistics and data analysis play a crucial role in helping us make sense of the vast amount of information available to us. They provide us with the tools and techniques to analyze and interpret data, uncover patterns and trends, and make informed decisions. By understanding statistics and data analysis, we can unlock valuable insights and knowledge that can drive innovation, improve processes, and lead to better outcomes.

Explaining the importance of statistics in the real world and different data analysis techniques

Statistics play a crucial role in understanding and interpreting the world around us. In various fields such as economics, healthcare, social sciences, and even sports, statistics provide insights, make predictions, and support decision-making processes. With data becoming increasingly available, the ability to analyze and interpret it accurately has become an essential skill.

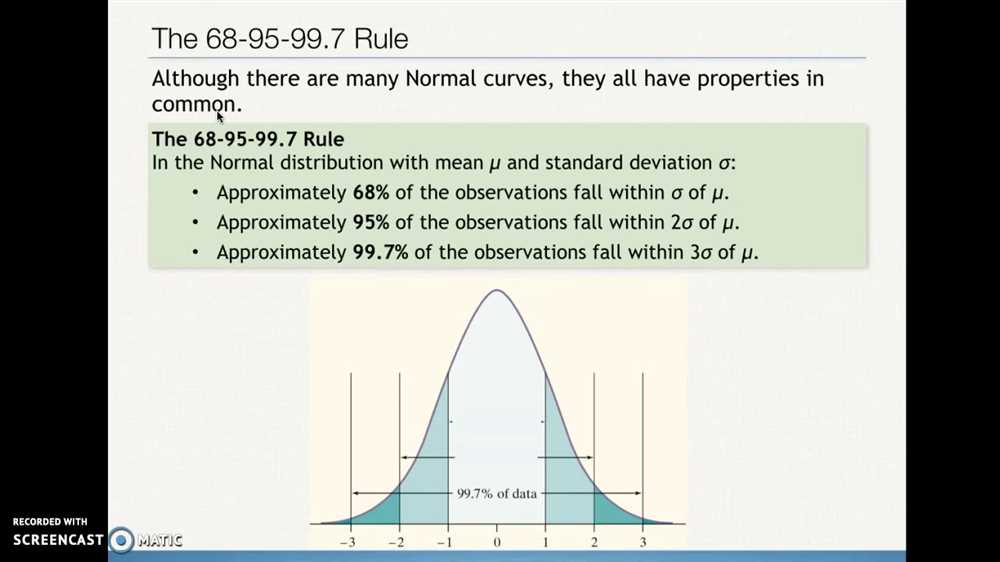

One of the fundamental reasons why statistics are important is their ability to summarize and describe complex data sets. Through techniques like measures of central tendency, such as mean and median, and measures of dispersion, such as range and standard deviation, statisticians can present key information about a dataset in a concise and meaningful way. This provides stakeholders with a better understanding of the data and facilitates decision-making.

A common data analysis technique is hypothesis testing. This process involves formulating a hypothesis about a population parameter, collecting data, and using statistical tests to determine if the evidence supports or contradicts the hypothesis. By analyzing the data using techniques like t-tests or chi-square tests, statisticians can draw conclusions and make informed decisions based on evidence rather than assumptions.

In addition to hypothesis testing, regression analysis is another important tool in data analysis. It allows us to determine the relationship between an independent variable and a dependent variable. Through techniques like simple linear regression or multiple regression analysis, statisticians can identify patterns, make predictions, and understand the impact of different variables on the outcome of interest. This information is valuable in a wide range of fields, from predicting sales based on advertising expenditure to understanding the effect of education on income levels.

Overall, statistics and data analysis techniques enable us to make sense of the world and make informed decisions based on evidence. Without these tools, we would be left to rely on intuition or anecdotal evidence, which may lead to biased or incorrect conclusions. The ability to analyze and interpret data accurately and objectively is increasingly valued in today’s data-driven world.

Basic Concepts in Probability

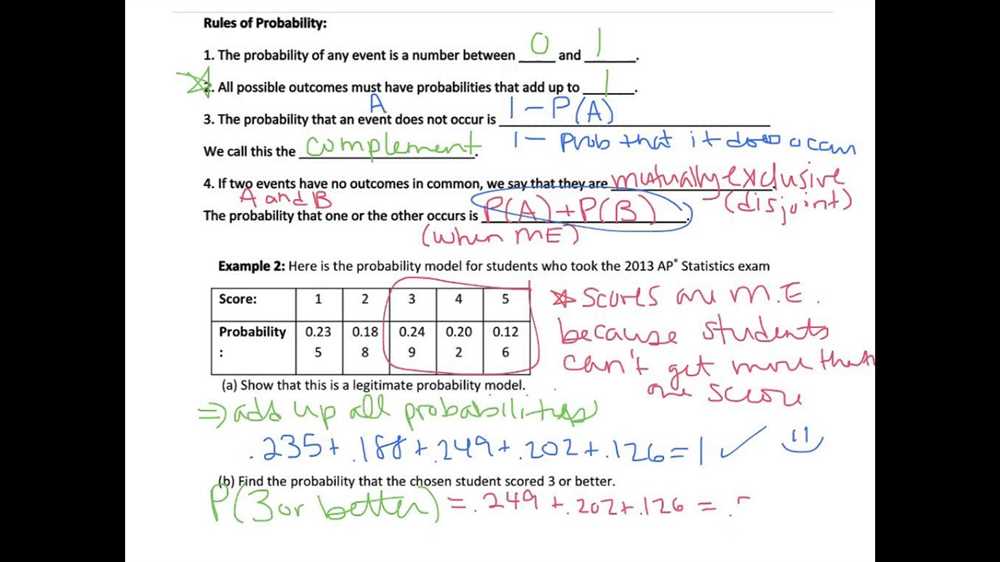

The field of probability theory is concerned with analyzing and predicting the likelihood of events occurring. Probability is a measure of uncertainty, and it plays a critical role in various fields, including statistics, economics, and engineering. In order to understand probability, it is important to grasp some basic concepts.

Random Experiment: A random experiment is a process or procedure that leads to different outcomes. For example, tossing a coin, rolling a die, or conducting a survey are all random experiments. The outcomes of a random experiment are uncertain and cannot be predicted with certainty.

Sample Space: The sample space, denoted by S, is the set of all possible outcomes of a random experiment. For instance, when rolling a die, the sample space would be S = {1, 2, 3, 4, 5, 6}. It is important to note that the sample space contains all possible outcomes, including those that may be unlikely or impossible.

Event: An event is any subset of the sample space. It represents a particular outcome or combination of outcomes. Events can be categorized as simple events, compound events, or the null event. A simple event consists of a single outcome, while a compound event consists of multiple outcomes. The null event, also known as the empty event, represents an event that cannot occur.

For example, when rolling a die, the event of rolling an even number can be represented by the set {2, 4, 6}, while the event of rolling a 7 would be the null event.

Probability: Probability is a numerical measure of the likelihood of an event occurring. It is expressed as a value between 0 and 1, where 0 represents an impossible event and 1 represents a certain event. The probability of an event can be calculated by dividing the number of favorable outcomes by the total number of possible outcomes.

Independent and Dependent Events: In probability, events can be classified as independent or dependent. Independent events are events that do not affect each other’s probabilities. For example, flipping a coin twice, with each flip being independent, would result in the same probability of getting heads or tails. On the other hand, dependent events are events that are influenced by each other. The probability of a dependent event occurring would depend on the occurrence of a previous event.

Overall, understanding these basic concepts in probability provides a foundation for analyzing and interpreting uncertain events and outcomes. By applying these concepts, individuals can make informed decisions and evaluate the likelihoods of various scenarios.

Exploring Sampling and Experimentation

Sampling and experimentation are essential components of the statistical modeling process. They allow us to collect data and make inferences about a population based on a sample. Understanding how to properly sample and conduct experiments is crucial for obtaining valid and reliable results.

In statistics, a sample is a subset of individuals or observations from a larger population. The process of sampling involves selecting individuals from the population in a way that ensures representativeness. This means that the characteristics of the sample should be similar to those of the population it is meant to represent. Various sampling methods, such as simple random sampling, stratified sampling, and cluster sampling, can be used to achieve this representativeness.

Experimentation, on the other hand, involves manipulating one or more variables to observe their effects on the outcome of interest. This allows researchers to establish cause-and-effect relationships between variables. In an experiment, individuals or observations are usually assigned to different treatment groups, and their responses are measured and compared. Random assignment to treatment groups helps to create comparable groups and reduce bias.

Sampling and experimentation both involve uncertainty, and statistical methods are used to quantify and manage this uncertainty. Sample statistics, such as means or proportions, can be used to estimate population parameters, and confidence intervals can be constructed to measure the precision of these estimates. Similarly, hypothesis testing can be used to determine whether the observed differences between treatment groups are statistically significant or simply due to random variation.

Overall, sampling and experimentation are powerful tools for understanding and modeling the world around us. By properly collecting and analyzing data, we can make informed decisions and draw conclusions that are based on evidence and statistical reasoning. These concepts form the foundation of statistical modeling, and mastering them is essential for conducting rigorous research and making sound statistical inferences.

Overview of Sampling Techniques, the Design of Experiments, and Analyzing Experimental Data

Sampling techniques, the design of experiments, and analyzing experimental data are crucial components of statistical analysis. These methodologies allow researchers to draw meaningful conclusions from a sample dataset that can be generalized to a larger population. By carefully selecting a sample that represents the population of interest, researchers can make inferences about the population as a whole.

Sampling techniques include simple random sampling, stratified sampling, cluster sampling, and systematic sampling. Simple random sampling involves selecting individuals from a population at random, ensuring that every individual has an equal chance of being chosen. Stratified sampling divides the population into homogeneous groups, or strata, ensuring representation from each group. Cluster sampling involves dividing the population into clusters and randomly selecting whole clusters for analysis. Systematic sampling involves selecting individuals from a population at regular intervals, such as every nth person.

The design of experiments involves carefully planning and controlling the variables in a study to ensure valid and reliable results. Researchers use techniques such as random assignment, control groups, and replication to minimize bias and confounding variables. Random assignment involves randomly assigning participants to different experimental conditions to ensure equal representation. Control groups are used as a comparison group to measure the effect of the independent variable. Replication involves repeating the study to confirm the results.

Analyzing experimental data involves using statistical techniques to draw conclusions from the data collected during an experiment. Descriptive statistics, such as measures of central tendency and variability, provide a summary of the data. Inferential statistics allow researchers to make inferences about the population based on the sample data. Techniques such as hypothesis testing, confidence intervals, and regression analysis help researchers determine the significance of their findings and make predictions about the population.

Sampling Techniques:

- Simple random sampling

- Stratified sampling

- Cluster sampling

- Systematic sampling

The Design of Experiments:

- Random assignment

- Control groups

- Replication

Analyzing Experimental Data:

- Descriptive statistics

- Inferential statistics

- Hypothesis testing

- Confidence intervals

- Regression analysis