Welcome to Chapter 1 of our data analysis series! In this chapter, we dive into analyzing one-variable data. Understanding and interpreting data is a critical skill in today’s data-driven world, and this chapter gives you the tools and knowledge to analyze and make sense of data on a single variable.

As we delve into this chapter, we will introduce you to key concepts and techniques that will help you uncover patterns, trends, and insights from a single variable. Whether you are working with numerical data or categorical data, the principles and methods covered in this chapter are applicable across various fields and disciplines.

Throughout this chapter, we will explore different ways to summarize and describe one-variable data, including measures of central tendency such as the mean, median, and mode, as well as measures of spread like the range, variance, and standard deviation. We will also look at graphical representations of data, such as histograms, box plots, and stem-and-leaf plots, which show the shape of the data distribution and help identify outliers or unusual patterns.

By the end of this chapter, you will have a solid foundation in analyzing one-variable data and be equipped with the skills to extract meaningful information from datasets. So let’s dive in and unlock the power of data analysis in Chapter 1!

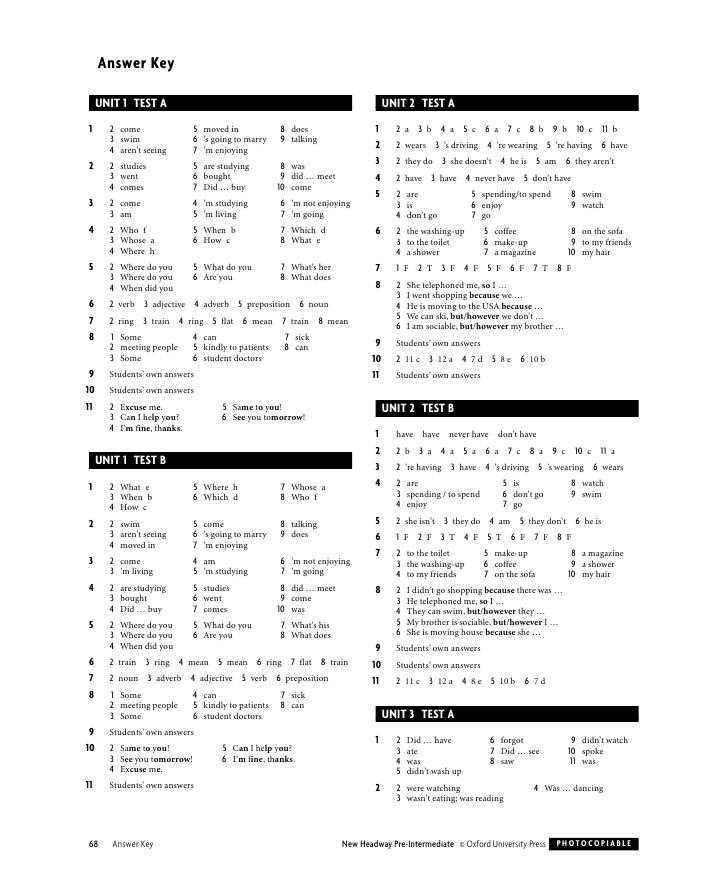

Chapter 1: Analyzing One-Variable Data Answer Key

In Chapter 1 of our data analysis course, we focused on analyzing one-variable data. This involved studying different statistical measures and techniques to understand and interpret data sets. The purpose of this chapter was to provide students with the necessary skills to analyze and draw meaningful conclusions from data.

Throughout the chapter, we covered various topics such as measures of central tendency, including mean, median, and mode. We also explored measures of dispersion, such as range and standard deviation, to understand the spread of data values. Students learned how to calculate these measures and interpret their significance in relation to the data set.

Additionally, we delved into graphical representations of data, including histograms, bar charts, and box plots. These visuals allowed students to visualize the distribution of data and identify any outliers or patterns within the data set. In combination with the numerical measures, students gained a comprehensive understanding of the data.

The answer key for Chapter 1 provided students with the correct solutions for the exercises and problems given throughout the chapter. This helped students to check their work and verify their understanding of the concepts. Additionally, the answer key served as a valuable tool for review and self-assessment, allowing students to identify any areas they may need to revisit or seek further clarification on.

By the end of Chapter 1, students should have developed a strong foundation in analyzing one-variable data. They should be able to calculate and interpret measures of central tendency and dispersion, as well as create and interpret graphical representations of data. This knowledge will serve as a stepping stone for the upcoming chapters, where we will dive deeper into more complex data analysis techniques.

Section 2: Descriptive Statistics

In this section, we will explore the concept of descriptive statistics, which involves summarizing and organizing data in order to gain insights and make conclusions. Descriptive statistics provide a clear and concise way to understand the characteristics and patterns of a dataset.

Measures of central tendency: One aspect of descriptive statistics is the calculation of measures of central tendency, which represent the typical or average value of a dataset. The most common measures of central tendency are the mean, median, and mode. The mean is calculated by summing all the values in the dataset and dividing by the number of observations. The median is the middle value when the dataset is ordered from least to greatest (or the average of the two middle values when the number of observations is even), and the mode is the value that appears most frequently.

Measures of dispersion: Descriptive statistics also include measures of dispersion, which quantify the spread or variability of the data. Common measures of dispersion include the range, variance, and standard deviation. The range is the difference between the highest and lowest values in the dataset. Variance is the average of the squared differences between each data point and the mean (for a sample, the sum of squared differences is usually divided by n - 1 rather than n), while standard deviation is the square root of the variance, which puts it back in the same units as the data.
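The three measures of dispersion can be sketched in a few lines of Python using only the standard library. The data values below are made up for illustration and do not come from the chapter exercises; the sketch computes the population variance by hand and then shows the sample version (divisor n - 1) from the `statistics` module for comparison.

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # illustrative values (an assumption, not chapter data)

# Range: largest value minus smallest value.
data_range = max(data) - min(data)

# Population variance: the mean of the squared deviations from the mean.
mean = statistics.fmean(data)
squared_deviations = [(x - mean) ** 2 for x in data]
pop_variance = sum(squared_deviations) / len(data)

# Standard deviation: the square root of the variance.
pop_std = math.sqrt(pop_variance)

# Sample variance divides by n - 1 instead of n.
sample_variance = statistics.variance(data)

print(data_range, pop_variance, pop_std, round(sample_variance, 3))
```

For this data set the mean is 5, the squared deviations sum to 32, and so the population variance is 4 and the population standard deviation is 2. The sample variance is slightly larger because the same sum is divided by 7 instead of 8.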

Graphical representations: In addition to numerical summaries, descriptive statistics can also be communicated through visual representations. Graphs and charts, such as histograms and pie charts for a single variable, or scatter plots for a pair of variables, provide a visual depiction of the data distribution and patterns. These visual representations can enhance our understanding of the data and enable us to identify trends or outliers.

Summary: Descriptive statistics is a fundamental tool in data analysis. It allows us to summarize and organize data, calculate measures of central tendency and dispersion, and visually represent data patterns. By utilizing descriptive statistics techniques, we can better understand the characteristics and behavior of a dataset, and consequently make more informed decisions or draw meaningful conclusions.

Section 3: Measures of Central Tendency

In statistics, measures of central tendency are used to describe the center, or average, of a data set. There are three main measures of central tendency: the mean, the median, and the mode. Each of these measures provides different information about the data set and can be useful in different situations.

The mean, often referred to as the average, is calculated by adding up all the values in a data set and dividing by the number of values. The mean is sensitive to extreme values, so it may not accurately represent the center of the data if there are outliers. It works best when the data is roughly symmetric with no extreme values, as in a normal distribution.

The median is the middle value in a data set when the values are arranged in order. If there is an even number of values, the median is the average of the two middle values. The median is barely affected by extreme values, because it depends only on the order of the values and not on how far out the extremes lie. This makes it a more robust measure of central tendency than the mean, and it is often used when the data is skewed or there are outliers.
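The difference in sensitivity between the mean and the median is easy to see with a small experiment. The sketch below uses hypothetical salary figures (in thousands) and adds a single extreme value to see how far each measure moves.

```python
import statistics

salaries = [30, 32, 35, 38, 40]   # hypothetical values, in thousands
with_outlier = salaries + [400]   # the same data plus one extreme value

mean_before = statistics.mean(salaries)
median_before = statistics.median(salaries)

mean_after = statistics.mean(with_outlier)
# Six values now, so the median is the average of the two middle values (35 and 38).
median_after = statistics.median(with_outlier)

print(f"mean:   {mean_before} -> {mean_after:.1f}")
print(f"median: {median_before} -> {median_after}")
```

Both measures start at 35. After the outlier is added, the mean jumps to about 95.8 while the median only moves to 36.5.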

The mode is the value that appears most frequently in a data set. There can be one mode, multiple modes, or no mode at all. The mode is useful when you want to find the most common value in a data set. However, it may not accurately represent the center of the data if there are multiple values with similar frequencies.
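The one-mode, several-modes, and no-mode cases can be checked with `statistics.multimode` (available in Python 3.8 and later), which returns every value tied for the highest frequency. The lists below are illustrative only.

```python
import statistics

one_mode = [1, 2, 2, 3, 4]
two_modes = [1, 1, 2, 3, 3]
no_repeats = [1, 2, 3, 4]

print(statistics.multimode(one_mode))    # [2]
print(statistics.multimode(two_modes))   # [1, 3]

# When no value repeats, every value ties for "most frequent", so multimode
# returns all of them. Many textbooks describe this case as having no mode.
print(statistics.multimode(no_repeats))  # [1, 2, 3, 4]
```

Note the difference in convention for the last case: the library reports a four-way tie, whereas a textbook answer would usually be "no mode".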

While the mean, median, and mode are all measures of central tendency, it is important to consider the characteristics of the data set and the goals of the analysis when choosing which measure to use. Each measure provides different insights into the data and can be used to answer different questions about the distribution of values.

Section 4: Measures of Dispersion

In statistics, measures of dispersion are used to describe the spread or variability of a data set. They provide information about how the individual data points are spread out around the central tendency. Two commonly used measures of dispersion are the range and the standard deviation.

The range is the simplest measure of dispersion and is calculated by subtracting the smallest value from the largest value in the data set. It provides an indication of the total spread of the data. However, it is sensitive to outliers and can be heavily influenced by extreme values.

The standard deviation is a more informative measure of dispersion because it takes into account all the data points, not just the two extremes. It is the square root of the average squared distance between each data point and the mean, so it can be read as the typical distance of a data point from the mean. A low standard deviation indicates that the data points are close to the mean, while a high standard deviation indicates that the data is more spread out. Like the mean, it is still sensitive to outliers, but it is widely used in inferential statistics and is often preferred over the range.
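To see how the range and the standard deviation respond to a single extreme observation, the following sketch computes both before and after adding one. The readings are hypothetical, and `value_range` is a helper name introduced here for illustration.

```python
import statistics

readings = [10, 12, 11, 13, 12]   # hypothetical measurements
with_extreme = readings + [50]    # the same data plus one extreme value

def value_range(values):
    """Largest value minus smallest value."""
    return max(values) - min(values)

range_before = value_range(readings)
range_after = value_range(with_extreme)

# Population standard deviation (divides by n).
std_before = statistics.pstdev(readings)
std_after = statistics.pstdev(with_extreme)

print(f"range: {range_before} -> {range_after}")
print(f"std:   {std_before:.2f} -> {std_after:.2f}")
```

The range goes from 3 to 40, and the standard deviation grows from roughly 1 to roughly 14, so both measures react strongly to the extreme value even though the standard deviation draws on every data point.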

These measures of dispersion are essential for understanding the variability within a data set. They provide important insights into the nature of the data and help in making inferences and predictions. When analyzing one-variable data, calculating and interpreting these measures of dispersion can help identify any unusual or extreme observations and provide a clearer picture of the overall distribution.

Measures of Dispersion Summary:

- The range is the simplest measure of dispersion, calculated as the difference between the largest and smallest values in the data set.

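- The standard deviation is the square root of the average squared distance between each data point and the mean, and describes the typical distance of a data point from the mean.

- The standard deviation uses every data point rather than only the two extremes, and is widely used in inferential statistics.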

- Both measures provide insights into the variability within a data set and aid in making inferences and predictions about the data.

The section on measures of dispersion is an important component of analyzing one-variable data. By understanding how the data points are spread out around the central tendency, researchers and analysts can gain a deeper understanding of the data and make more informed decisions based on their findings.

Section 5: Graphical Representation of Data

In this section, we will explore the various methods of graphical representation that can be used to analyze one-variable data. Graphical representation is an effective way to visually display data and gain insights from it.

Bar graphs: One common method of graphical representation is the use of bar graphs. Bar graphs are used to compare categorical data by representing each category as a bar, with the height of the bar indicating the frequency or percentage of that category.
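The frequencies behind a bar graph can be tallied with `collections.Counter`. The sketch below uses made-up survey responses and prints a simple text version of the graph, one row per category, rather than relying on a plotting library.

```python
from collections import Counter

responses = ["red", "blue", "red", "green", "blue", "red"]  # hypothetical survey answers

counts = Counter(responses)       # frequency of each category
total = sum(counts.values())

# One text "bar" per category, most frequent first.
for category, count in counts.most_common():
    print(f"{category:<6} {'#' * count} {count} ({count / total:.0%})")
```

The same counts, divided by the total, give the proportions that a pie chart would display as slices.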

Pie charts: Another method of graphical representation is the use of pie charts. Pie charts are used to represent the proportions of different categories within a whole. Each category is represented as a slice of the pie, with the size of the slice indicating the proportion of that category.

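Line graphs: Line graphs are used to represent the relationship between two variables, so they go a step beyond one-variable data. One variable is plotted on the x-axis and the other on the y-axis. The typical case in a one-variable setting is a single variable recorded over time, with time on the x-axis. By connecting the data points with a line, we can observe the trend or pattern between the two variables.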

Histograms: Histograms are used to represent the distribution of numerical data. The data is divided into intervals or bins, and the height of each bar indicates the frequency or percentage of data points that fall within that interval. Histograms are useful in identifying the shape and central tendency of a dataset.
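The binning step is the core of a histogram. As a rough sketch, the code below groups hypothetical test scores into bins of width 10 and prints one text bar per bin; the bin width and the scores are assumptions chosen for illustration.

```python
from collections import Counter

scores = [52, 57, 61, 64, 68, 70, 71, 75, 78, 83, 88, 95]  # hypothetical scores
bin_width = 10

# Map each score to the lower edge of its bin, e.g. 57 -> 50 and 70 -> 70.
bin_counts = Counter((score // bin_width) * bin_width for score in scores)

for lower in sorted(bin_counts):
    upper = lower + bin_width - 1
    print(f"{lower}-{upper}: {'#' * bin_counts[lower]}")
```

The bars rise to a peak in the 70-79 bin and fall away on either side, which is the kind of shape information a histogram is meant to reveal. A different bin width can change the picture, so it is worth trying more than one.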

In conclusion, graphical representation lets us visually analyze and interpret one-variable data. Bar graphs, pie charts, line graphs, and histograms are some of the most common methods. They provide a concise and clear visualization, making it easier to understand the data and draw insights from it.

Section 6: The Normal Distribution

The normal distribution, also known as the Gaussian distribution or bell curve, is an important statistical concept that is widely used in various fields such as psychology, economics, and engineering. It is a continuous probability distribution that is characterized by its bell-shaped curve.

The normal distribution is defined by its mean and standard deviation. The mean represents the center of the distribution and the standard deviation determines the spread of the data around the mean. In a normal distribution, about 68% of the data falls within one standard deviation of the mean, about 95% falls within two standard deviations, and about 99.7% falls within three standard deviations. This pattern is often called the empirical rule.
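These percentages can be checked with `statistics.NormalDist` from the Python standard library. The sketch below uses the standard normal distribution (mean 0, standard deviation 1); `share_within` is a helper name introduced here for illustration.

```python
from statistics import NormalDist

standard_normal = NormalDist(mu=0.0, sigma=1.0)

def share_within(k: float) -> float:
    """Probability that a normal value lies within k standard deviations of the mean."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)

for k in (1, 2, 3):
    print(f"within {k} SD: {share_within(k):.4f}")
```

The results are approximately 0.6827, 0.9545, and 0.9973, which round to the familiar 68%, 95%, and 99.7%. Because the rule is stated in units of standard deviations, the same shares hold for any mean and standard deviation.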

The normal distribution has several properties:

- It is symmetric, with the mean, median, and mode all being equal.

- It is unimodal, with a single peak at the mean.

- It is asymptotic, meaning that the tails of the distribution approach but never reach the x-axis.

- It is defined for the entire range of real numbers, from negative infinity to positive infinity.

The normal distribution is widely used for:

- Statistical inference: Many statistical tests and models assume that the data is normally distributed.

- Quality control: It is used to define control limits and identify outliers in manufacturing processes.

- Approximation: Many natural phenomena and data sets can be approximated by a normal distribution.

- Probability calculations: The properties of the normal distribution allow for precise calculations of probabilities.
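As a small sketch of the probability and quality-control uses listed above, the code below models a hypothetical measurement as normal with mean 100 and standard deviation 15 (both values are assumptions for illustration) and uses `statistics.NormalDist` to compute a few probabilities, a percentile cutoff, and three-standard-deviation limits.

```python
from statistics import NormalDist

scores = NormalDist(mu=100, sigma=15)  # hypothetical mean and standard deviation

# Probability of a value at or below 115 (one standard deviation above the mean).
p_below_115 = scores.cdf(115)

# Probability of a value between 85 and 130.
p_between = scores.cdf(130) - scores.cdf(85)

# Value below which 95% of the distribution lies.
cutoff_top_5_percent = scores.inv_cdf(0.95)

# Limits at three standard deviations from the mean, as used for control limits.
lower_limit = scores.mean - 3 * scores.stdev
upper_limit = scores.mean + 3 * scores.stdev

print(f"{p_below_115:.4f} {p_between:.4f} {cutoff_top_5_percent:.2f}")
print(lower_limit, upper_limit)
```

These numbers are only as good as the assumption that the data really is close to normal, so it is worth checking a histogram of the data before relying on them.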

The normal distribution is an important concept in statistics, and understanding its properties and applications can greatly enhance data analysis and decision-making. When data is approximately normal, modeling it with the normal distribution lets us estimate probabilities, perform statistical tests, and gain insights into the population.

Section 7: Probability and Statistics

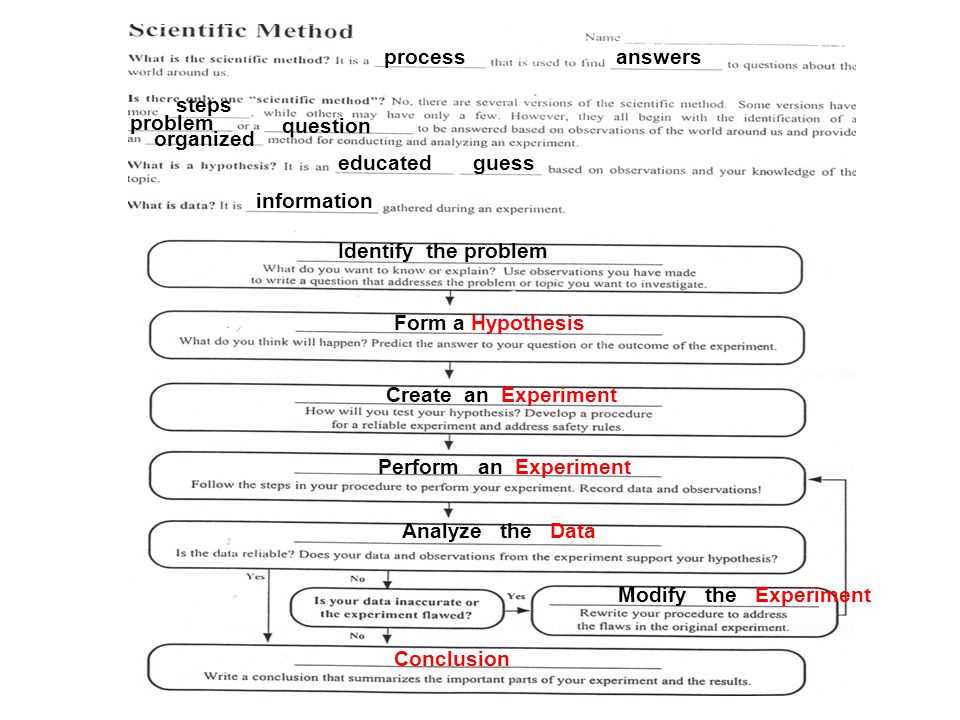

In Section 7, we explore the concepts of probability and statistics. Probability is the study of uncertainty and the likelihood of events occurring. It plays a vital role in many fields, including finance, insurance, and weather forecasting. Statistics, on the other hand, involves the collection, analysis, interpretation, presentation, and organization of data. These two fields are closely related and are essential in making informed decisions.

Probability

Probability is the branch of mathematics that deals with the likelihood of events occurring. It provides a framework for predicting the outcomes of experiments or events. In the context of statistics, probability plays a crucial role in determining the likelihood of different outcomes and helps us make informed decisions based on available data.

Key concepts:

- Sample space: The set of all possible outcomes of an experiment.

- Event: A set of outcomes from the sample space.

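- Probability of an event: The likelihood of an event occurring, expressed as a number between 0 and 1, where 0 means the event cannot occur and 1 means it is certain.

- Probability distribution: A function that assigns probabilities to different events or outcomes, with the probabilities of all outcomes in the sample space adding up to 1.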
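The four concepts above can be made concrete with a roll of two dice. The sketch below assumes the dice are fair, so every outcome in the sample space is equally likely, and uses exact fractions to avoid rounding.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space: all 36 ordered outcomes of rolling two six-sided dice.
sample_space = list(product(range(1, 7), repeat=2))

# Event: the two dice sum to 7.
event_sum_is_7 = [outcome for outcome in sample_space if sum(outcome) == 7]

# Probability of the event, assuming equally likely outcomes (fair dice).
p_sum_is_7 = Fraction(len(event_sum_is_7), len(sample_space))

# Probability distribution of the sum: each possible total mapped to its probability.
sum_counts = Counter(sum(outcome) for outcome in sample_space)
distribution = {total: Fraction(count, len(sample_space))
                for total, count in sorted(sum_counts.items())}

print(p_sum_is_7)                   # 1/6
print(sum(distribution.values()))   # 1
```

Six of the 36 outcomes sum to 7, giving a probability of 1/6, and the probabilities across all possible totals add up to 1.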

Statistics

Statistics is the discipline that involves the collection, analysis, interpretation, presentation, and organization of data. It provides tools and techniques for making sense of large amounts of information and drawing conclusions from the data. Statistics is used in a wide range of fields, including business, healthcare, social sciences, and engineering.

Key concepts:

- Data: Information collected or observed from different sources.

- Descriptive statistics: Methods used to summarize and describe the main features of a dataset.

- Inferential statistics: Techniques used to make inferences or predictions about a population based on a sample.

- Hypothesis testing: The process of making and evaluating claims or statements about a population based on sample data.

In conclusion, probability and statistics are integral parts of data analysis. Understanding these concepts enables us to make informed decisions, draw meaningful conclusions, and make predictions based on available data.