Statistics is a branch of mathematics that involves the collection, analysis, interpretation, and presentation of data. It plays a crucial role in various fields, including business, healthcare, social sciences, and more. In order to effectively understand and apply statistical concepts, it is essential to master the foundational knowledge covered in Chapter 1.

This article provides comprehensive answers to the Chapter 1 test questions, ensuring that readers have a thorough understanding of the key concepts discussed in the chapter. By delving into topics such as data collection methods, types of variables, measures of central tendency, and measures of variation, this guide aims to clarify any confusion and solidify the understanding of statistics.

By reviewing the answers to the Chapter 1 test questions, readers will gain valuable insights into the fundamental principles of statistics. They will learn how to differentiate between qualitative and quantitative data, grasp the importance of accurate data collection, and master the calculation and interpretation of various statistical measures. Whether you are a student preparing for an exam or an individual seeking a better understanding of statistics, this guide is a valuable resource.

Key Concepts in Statistics

In the field of statistics, there are several key concepts that are essential for understanding and analyzing data. These concepts provide the foundation for interpreting and drawing conclusions from numerical information. By understanding these concepts, individuals can make informed decisions and evaluate the validity of statistical claims.

1. Variables:

A variable is a characteristic or attribute that can be measured or observed. It can take on different values for different individuals or objects. Variables can be classified as either categorical or numerical. Categorical variables represent characteristics that can be divided into different categories or groups, such as gender or occupation. Numerical variables, on the other hand, represent characteristics that can be measured on a continuous or discrete scale, such as height or income.

2. Population and Sample:

In statistics, a population refers to the entire group of individuals or objects that we are interested in studying. It is often not feasible or practical to collect data from an entire population, so we usually work with a sample. A sample is a subset of the population that is selected to represent the population as a whole. The goal is to obtain a sample that is representative of the population to ensure that the conclusions drawn from the sample can be generalized to the population.

3. Descriptive Statistics:

Descriptive statistics involve summarizing and presenting data in a meaningful way. This includes measures such as the mean, median, and mode, which provide information about the central tendency of a dataset. Descriptive statistics also include measures of variability, such as the range and standard deviation, which indicate the spread or dispersion of the data. These statistics help us understand the characteristics of a dataset and provide insights into its distribution and shape.

4. Inferential Statistics:

Inferential statistics involve using sample data to make inferences or draw conclusions about a population. By analyzing sample data and applying probability theory, inferential statistics allow us to estimate population parameters, test hypotheses, and make predictions. This branch of statistics helps us generalize from a sample to a larger population and make reliable statements about the population based on limited information.

5. Hypothesis Testing:

Hypothesis testing is a statistical procedure used to make decisions or draw conclusions about a population based on sample data. It involves formulating a hypothesis, collecting and analyzing data, and evaluating the evidence against the hypothesis. The goal is to determine whether the data provide enough evidence to support or reject the hypothesis. This process helps researchers make evidence-based decisions and ensures the validity and reliability of statistical claims.

In conclusion, understanding key concepts in statistics is essential for analyzing and interpreting data. These concepts, such as variables, populations and samples, descriptive and inferential statistics, and hypothesis testing, provide the necessary tools for making informed decisions and drawing accurate conclusions. By applying these concepts, individuals can navigate the complex world of data and use statistical analysis to inform their decision-making processes.

Descriptive Statistics: Chapter 1 Test Answer Key

In Chapter 1 of the Statistics course, students were introduced to the concept of descriptive statistics. These statistical methods are used to summarize and analyze data, providing meaningful insights into the information at hand. The Chapter 1 test was designed to assess students’ understanding of these concepts and their ability to apply them in practical scenarios.

The answer key for the Chapter 1 test is provided below, outlining the correct answers and explanations for each question:

- Question: What is the purpose of descriptive statistics?

Answer: Descriptive statistics help us summarize and describe the main features of a dataset, such as central tendency (mean, median), dispersion (range, standard deviation), and shape (skewness, kurtosis). - Question: How is the mean calculated?

Answer: The mean is calculated by summing up all the values in a dataset and dividing the sum by the total number of values. It represents the average value of the dataset. - Question: What is the median?

Answer: The median is the middle value in a dataset when it is sorted in ascending order. It represents the value that divides the dataset into two equal halves. - Question: How is the range calculated?

Answer: The range is calculated by subtracting the smallest value in a dataset from the largest value. It represents the spread or variability of the dataset. - Question: What is the standard deviation?

Answer: The standard deviation measures the average amount of deviation or dispersion of values from the mean. It provides insights into how spread out the data is.

By understanding and correctly answering these questions, students demonstrate their grasp of the fundamental concepts of descriptive statistics. This knowledge will serve as a foundation for further exploration and application of statistical methods throughout the course.

Measures of Central Tendency

Measures of central tendency refer to statistical measures that describe the center or average of a data set. These measures provide an understanding of the typical value or center around which the data tends to cluster. The three main measures of central tendency are the mean, median, and mode.

Mean: The mean is calculated by summing up all the values in a data set and dividing the sum by the total number of observations. It represents the average value of the data set. The mean is sensitive to extreme values, as these outliers can significantly impact the overall average.

Median: The median is the middle value in a data set when arranged in ascending or descending order. If there is an even number of observations, the median is the average of the two middle values. The median is not affected by extreme values and provides a measure of central tendency that is more resistant to outliers.

Mode: The mode is the value or values that occur most frequently in a data set. It represents the peak or most typical value. A data set can have multiple modes or no mode at all if no value is repeated. The mode can be useful in determining the most common value or category within the data set.

In summary, measures of central tendency are statistical tools that provide insight into the typical value or center of a data set. The mean, median, and mode each offer a unique perspective on the central tendency, and their selection depends on the nature of the data and the purpose of the analysis.

Measures of Dispersion

Measures of dispersion are used to understand how spread out or concentrated a set of data is. They provide important information about the variability within a dataset.

One commonly used measure of dispersion is the range, which is the difference between the maximum and minimum values in a dataset. The range is simple and easy to calculate, but it can be sensitive to extreme values. For example, if there is one extremely high or low value in the dataset, it can significantly inflate or deflate the range.

A more robust measure of dispersion is the interquartile range (IQR). The IQR is calculated by finding the difference between the first quartile (25th percentile) and the third quartile (75th percentile) of the data. It is less affected by extreme values than the range. The IQR provides a measure of the spread of the middle 50% of the data.

Another commonly used measure of dispersion is the variance. Variance quantifies the average deviation of each data point from the mean of the dataset. It takes into account all the values in the dataset rather than just the maximum and minimum values. The variance is calculated by taking the average of the squared differences between each data point and the mean. A high variance indicates a wide spread of values, while a low variance suggests a more concentrated dataset.

Standard deviation is another popular measure of dispersion, closely related to variance. It is the square root of the variance and provides a measure of the average deviation from the mean. The standard deviation is often used as a way to express the spread of data in the same units as the original data.

- Range: difference between the maximum and minimum values in a dataset

- Interquartile range (IQR): difference between the first quartile and third quartile of the data

- Variance: average deviation of each data point from the mean

- Standard deviation: square root of the variance

These measures of dispersion are essential tools in statistical analysis, as they help to provide a comprehensive understanding of the spread and variability within a dataset. They are often used in conjunction with measures of central tendency, such as the mean or median, to provide a complete description of a dataset.

Probability and Statistics: Chapter 1 Test Answer Sheet

In the chapter 1 test, students were required to demonstrate their understanding of probability and statistics concepts and apply them in various scenarios. The test answer sheet showcases their proficiency in calculating probabilities, analyzing data, and interpreting results.

The first section of the test focused on basic probability concepts. Students were asked to determine the probability of an event occurring given limited information. They were required to analyze the given data to calculate probabilities, considering both theoretical and experimental approaches. The answers demonstrated their ability to apply mathematical formulas and reasoning skills to solve probability problems.

The second section of the test delved into statistical analysis. Students were presented with a set of data and were asked to calculate various measures of central tendency, such as mean, median, and mode. They also had to analyze the spread of the data by calculating the range and interquartile range. The test answer sheet revealed their ability to perform these calculations accurately and interpret the results in the context of the data provided.

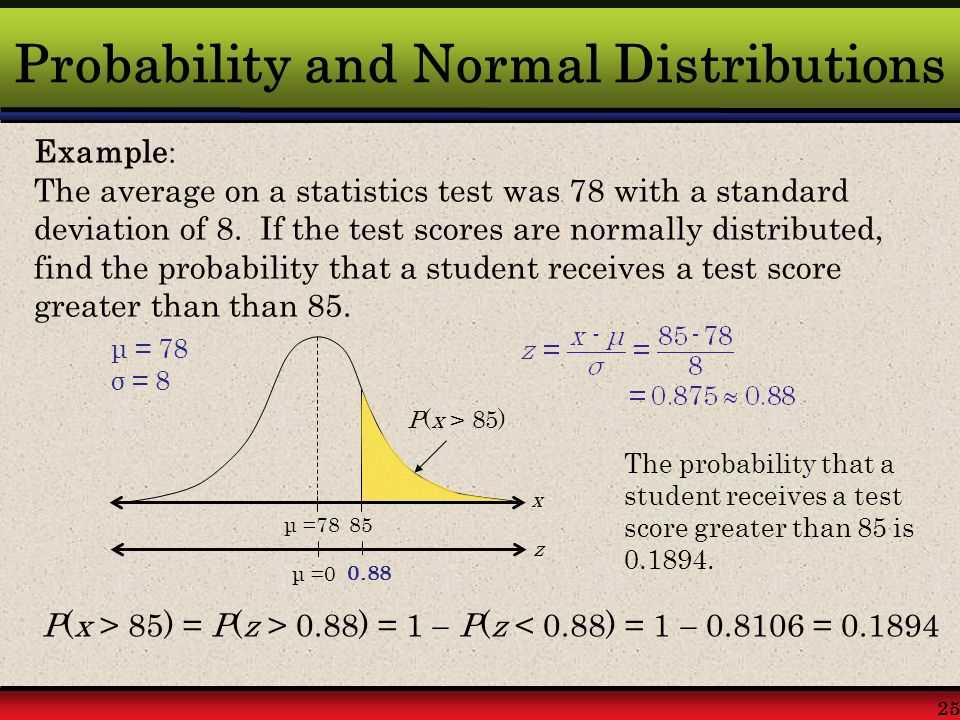

Furthermore, the test included questions related to probability distributions, including the binomial and normal distributions. Students were asked to apply the appropriate formulas and concepts to calculate probabilities and analyze the characteristics of these distributions. Their answers showcased their understanding of these distributions and their ability to perform calculations involving them.

In conclusion, the chapter 1 test answer sheet provided an insight into students’ comprehension and application of probability and statistics concepts. It demonstrated their ability to calculate probabilities, analyze data, and interpret results effectively. These foundational skills will serve as a strong basis for future statistical analysis and further exploration of probability concepts.

Basic Probability Concepts

In statistics, probability is a measure of how likely it is that a particular event will occur. It is used to quantify uncertainty and is an essential concept in the field of probability theory. By understanding basic probability concepts, statisticians are able to make informed decisions and predictions based on the likelihood of different outcomes.

1. Sample Space: The sample space refers to the set of all possible outcomes of an experiment or event. It is denoted by the symbol S. For example, when tossing a fair coin, the sample space consists of two possible outcomes: heads or tails.

2. Event: An event is a subset of the sample space, which consists of one or more outcomes. It is denoted by a capital letter, such as A, B, or C. For example, if we define event A as getting a head when tossing a fair coin, then A = {H}, where H represents the outcome of getting a head.

3. Probability: Probability is a numerical measure ranging from 0 to 1 that represents the likelihood of an event occurring. The probability of an event A happening is denoted by P(A). For example, the probability of getting a head when tossing a fair coin is 0.5.

4. Union and Intersection: The union of two events A and B, denoted by A ∪ B, represents the set of outcomes that belong to either A or B or both. The intersection of two events A and B, denoted by A ∩ B, represents the set of outcomes that belong to both A and B. These concepts are used to determine the probability of combined events.

- Complement: The complement of an event A, denoted by A’, represents the set of outcomes that do not belong to A. It is calculated by subtracting the probability of A from 1. For example, if the probability of event A is 0.3, then the probability of event A’ is 1 – 0.3 = 0.7.

- Conditional Probability: Conditional probability is the probability of an event occurring given that another event has already occurred. It is denoted by P(A|B), where A is the event of interest and B is the event that has already occurred. Conditional probability is used to calculate probabilities in situations where events are not independent.

These basic probability concepts form the foundation of statistical analysis and help statisticians make sense of uncertainty in real-world scenarios. By understanding the sample space, events, and probabilities, statisticians can make informed decisions based on data and probabilities. Probability theory is used in various fields, including finance, engineering, biology, and social sciences, to analyze and predict outcomes.